Last Updated February 6, 2012

Environmental audio (or spatial sound) refers to a set of technologies and techniques to simulate virtual or remote audio environments.

Sound has many potential applications in the areas of virtual reality and telepresence. Spatial sound could help increase the sense of presence in virtual environments by relaying information about the environment and the objects within it. Such environmental awareness could be very beneficial in increasing the user's orientation in virtual environments.

This folder contains a couple of applications which let you play around with various 3D audio effects. The folder should be installed on the root of the C: drive in order for the applications to work correctly.

3D audio is an attempt to widen the stereo image produced by two loudspeakers or stereo headphones, or to create the illusion of sound sources placed anywhere in three-dimensional space, including behind, above or below the listener. This is achieved using reverberation and other effects, or by simulating the impact that the human head and body have on received audio (the head-related transfer function, HRTF).

Surround sound is used to create a more realistic audio environment by expanding audio playback (audio imaging) from one dimension (stereo) to two or three dimensions. Surround sound typically uses three or more channels, normally with one speaker per channel. There are many different surround sound systems, ranging from 3 to 24 channels. The most popular are the six-channel 5.1 systems, Dolby Digital and DTS.

This technology was used in id Software's DOOM. Every mono sound source is played as stereo, and its position can be altered by changing the volume level of the left or right channel. Such a system has no vertical positioning, but it is possible to change the sound a little (for example, by filtering out high frequencies) when it comes from behind the listener, because in that case the listener hears it slightly muffled.
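The left/right volume approach described above can be sketched in a few lines. This is only an illustration, not DOOM's actual code: the function name `constant_power_pan`, the pan range of -1 (hard left) to +1 (hard right) and the constant-power pan law are all assumptions made for the example.

```python
import math

def constant_power_pan(sample, pan):
    """Split one mono sample into (left, right) using channel volume only.

    pan is assumed to run from -1.0 (hard left) to +1.0 (hard right).
    The constant-power law used here is one common choice, not the only one.
    """
    pan = max(-1.0, min(1.0, pan))
    angle = (pan + 1.0) * math.pi / 4.0   # maps pan to 0 .. pi/2
    return sample * math.cos(angle), sample * math.sin(angle)
```

With this law a centred source goes to both channels at about 0.707 of its original amplitude, so the total power stays constant as the source moves across the stereo image.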

An HRTF (head-related transfer function) is a transfer function that models how sound is perceived with two ears in order to determine the positions of sources in space. The head and body are obstacles that modify the sound, so an ear that is shadowed from the sound source receives an altered signal; the brain then decodes the signals from both ears to determine the position of the sound source in space.

Downsides of HRTF

1. Sound can be badly distorted.

2. Operation can be pretty slow.

3. If sound sources are stationary, their positions can't be determined precisely, because the brain relies on movement (of the source, or subconscious micro-movements of the listener's head) to determine a sound source's position in space.

People typically turn their heads towards unexpected sounds. As the head turns, the brain gets additional information about the sound's position in space.

4. Headphones give the best results. Headphones make it simpler to deliver one signal to one ear and another signal to the other ear. However, some people do not like headphones, even light wireless models.

The fact that a sound source seems much closer when the player is wearing headphones should also be taken into account.

When speakers are used, crosstalk cancellation is necessary to prevent left-ear audio from reaching the right ear and vice versa. Real-time crosstalk cancellation requires significant processing power. Even then, the speakers and listener need to be placed fairly accurately, and the sweet spot is quite small.

One of the main features of a sound engine is distance effects. The farther the sound source, the quieter it is. One of the simplest models lowers the volume level at greater distances: the sound designer assigns a minimum distance beyond which the sound starts fading out. While the source is within this distance, the signal does not attenuate. Once it passes the minimum distance (taken here to be 1 meter), it loses 6 dB over the first meter, another 6 dB over the next 2 meters, another 6 dB over the next 4 meters, and continues to lose 6 dB each time the distance doubles. The level $L_2$ at distance $r_2$ is calculated from the level $L_1$ at distance $r_1$ as follows, where $\lg$ is the base-10 logarithm:

$$L_{2}=L_{1}-20\lg\left(\frac{r_2}{r_1}\right)$$

The sound keeps getting quieter until it reaches the maximum distance, where it's too far to be heard. When this distance is reached the sound could keep dying away until it reaches zero volume, but it's better to turn off such sounds to free resources. The farther the maximum distance, the longer the sound will be heard.
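As a rough sketch of the minimum/maximum distance model, the function below returns the attenuation in decibels for a given distance. The names `distance_gain_db` and `db_to_linear`, and the default distances of 1 m and 100 m, are assumptions made for illustration; real audio APIs expose their own distance models.

```python
import math

def distance_gain_db(distance, min_distance=1.0, max_distance=100.0):
    """Attenuation in dB (0 or negative) for a source at `distance`.

    Returns None beyond max_distance, signalling that the sound can be
    switched off entirely. Default distances are illustrative assumptions.
    """
    if distance >= max_distance:
        return None
    if distance <= min_distance:
        return 0.0                      # no attenuation inside the minimum distance
    return -20.0 * math.log10(distance / min_distance)

def db_to_linear(gain_db):
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)
```

With a minimum distance of 1 m this gives roughly -6 dB at 2 m, -12 dB at 4 m and -18 dB at 8 m, matching the doubling rule.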

In the real world high frequency sounds are absorbed by the atmosphere (HF Roll off). This effect is greater when the air is damp.

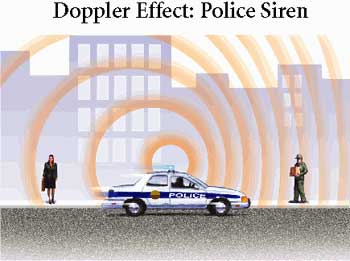

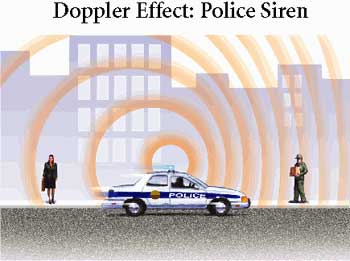

The Doppler effect is observed when the wavelength of a sound changes as its source approaches or moves away from the listener. When the source is approaching, the wavelength shortens and the pitch rises; when it is moving away, the wavelength lengthens and the pitch falls, by an amount that depends on the source's speed relative to the speed of sound. Racing and flight simulators benefit most from the Doppler effect. In shooters it can be used for rockets, lasers or plasma, i.e. for any objects that move very fast.
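The text does not give the formula itself. The sketch below uses the standard relation for a moving source and a stationary listener, f' = f · c / (c - v), where v is the source's speed towards the listener. The function name and the speed of sound of 343 m/s (air at about 20 °C) are assumptions for the example.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed value)

def doppler_frequency(source_hz, speed_towards_listener):
    """Perceived frequency for a moving source and a stationary listener.

    speed_towards_listener is positive when the source approaches and
    negative when it moves away; it must stay below the speed of sound.
    """
    if speed_towards_listener >= SPEED_OF_SOUND:
        raise ValueError("source must be slower than the speed of sound")
    return source_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND - speed_towards_listener)
```

A 440 Hz source approaching at 34.3 m/s is heard at about 489 Hz; the same source receding at that speed is heard at about 400 Hz.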

To create the effect of full immersion in the game, it's necessary to calculate the acoustic environment and its interaction with sound sources. As the sound propagates, the waves interact with the environment.

Wavetracing is the audio equivalent of ray tracing.

Sound waves can reach the listener in different ways:

The sound can be modified by the environment;

Wavetracing algorithms analyze the geometry describing the 3D space to determine, in real time, how sound waves propagate as they are reflected by and pass through passive acoustic objects in the 3D environment.

An audio polygon has its own location, size, shape and material properties. Its shape and its location relative to the sound sources and listener influence how each separate sound is reflected by, passes through or travels around the polygon. The material properties can range from transparent to sound to entirely absorbing or reflecting.

As a result, the sound becomes much more realistic: it combines 3D sound, the acoustics of the rooms and environment, and accurate delivery of the audio signals to the listener.

However, real-time wavetracing requires far more processing power than is available on current-generation games machines.

Reverberation (reverb) is an audio effect which approximates the sound of an enclosed environment. The effect works by repeating the sound to simulate first-order and second-order reflections.

The reverberation time is controlled primarily by two factors - the surfaces in the room, and the size of the room. The surfaces of the room determine how much energy is lost in each reflection. Highly reflective materials, such as a concrete or tile floor, brick walls, and windows, will increase the reverb time as they are very rigid. Absorptive materials, such as curtains, heavy carpet, and people, reduce the reverberation time (and the absorptiveness of most materials usually varies with frequency).

The following parameters can be used to control a reverb effect

reverb time - decay time

reverb mix - ratio of direct and reflected sound

high frequency ratio - HF is absorbed by soft surfaces

diffusion and density of late echoes

Roll-off factor - controls how the effect attenuates with distance

Pre-delay - time before first reflections are heard
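To make two of these parameters concrete, the sketch below implements a single feedback comb filter, a common building block of digital reverbs rather than a complete reverb. Only the reverb time and the reverb mix are modelled; the function names, the default values and the convention that the decay time is the time for the echoes to fall by 60 dB are assumptions for the example.

```python
def comb_feedback_gain(delay_s, decay_time_s):
    """Feedback gain that makes repeated echoes fall by 60 dB after decay_time_s."""
    return 10.0 ** (-3.0 * delay_s / decay_time_s)

def simple_reverb(dry, sample_rate, delay_s=0.03, decay_time_s=1.0, mix=0.3):
    """One feedback comb filter: each echo is a quieter copy of the previous one.

    mix is the fraction of reflected (wet) sound in the output.
    """
    delay = max(1, round(delay_s * sample_rate))       # delay in samples
    gain = comb_feedback_gain(delay / sample_rate, decay_time_s)
    buffer = [0.0] * delay                             # circular delay line
    out = []
    for n, x in enumerate(dry):
        delayed = buffer[n % delay]                    # signal from `delay` samples ago
        buffer[n % delay] = x + gain * delayed         # feed the echo back in
        out.append((1.0 - mix) * x + mix * delayed)
    return out
```

A longer decay time pushes the feedback gain towards 1, so the echoes take longer to die away; practical reverbs combine several such filters with different delays to avoid an audibly regular echo pattern.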

Audio effects add a lot of character to a sound and allow a particular sound to be modified and distorted so that it can be used in several very different situations or to build an entirely "new" sound.

An original (unmodified) sound is called a dry sound; a sound to which an effect has been applied is called a wet sound.

Audio special effects can enhance a sound in a number of ways

Types of Audio effects

Time domain effects are effects which repeat parts of the sound to simulate sound reflections. Reverb is the most important time domain effect and is treated separately.

An echo occurs when there is a perceptible delay between the original sound and its reflection. For an echo to occur, there must be a large reflective surface far enough away that it takes sufficient time for the sound to travel and be reflected back.

Parameters

The chorus effect is aptly named, because the goal of the effect is to create copies of the original sound in a way that tricks the listener into believing that he or she is hearing many sounds in unison: a chorus. The effect is achieved by creating a duplicate of the source using a short delay, and then modulating the delay time. By modulating the delay time, an impression can be created that many sounds are being created at the same time. Modulating the delay time also introduces slight variations in pitch, which adds complexity to the effect. The effect is not convincing in every instance, but it can make ensemble sounds seem bigger. It can be a pleasing effect on musical instruments like acoustic guitars, and can be an interesting special effect when used to create unnatural sounds.

The modulation effect is achieved by using a low-frequency oscillator (LFO). The LFO creates a fundamental waveform, in this case a sine wave or a triangle wave. At a given instant in time, the phase of the LFO is used as a factor to determine the delay time. The frequency and shape of the LFO waveform help to determine the character of the chorus effect.

The chorus effect has the following parameters:

Delay; represents the baseline time for the echo, that is, the delay time without LFO modulation. The range of possible values for this parameter falls into the category of doubling echo. This means that the time is too short for humans to perceive the echo as a discrete occurrence (the default delay is 16 ms); rather, it sounds like a doubling of the original signal. Shorter delay times make the doubling effect less obvious.

Depth; enables you to adjust the range of variance from the baseline delay time. You can think of this as a level control for the LFO. The greater the depth value, the greater the range of delay times created by the LFO. Depth is specified as a percentage value, with 100 percent yielding the greatest variation in the delay time. When creating chorus effects for musical instruments, you should use the depth parameter judiciously. High depth values tend to make the modulation effect of the LFO very obvious, which may be desirable for special effects, but can make a musical instrument effect sound unnatural.

Frequency; frequency of the LFO waveform

Wet/dry mix

Feedback; As with the echo effect, the feedback parameter represents the percentage of the output signal mixed back into the input buffer. Unlike the echo effect, the chorus effect lets you use a negative value for feedback. When you specify a negative value, the parameter represents the percentage of processed signal subtracted from the input. In either case, the goal is to add to the complexity of the chorus effect by compounding the processing. Large amounts of positive or negative feedback can drastically change the character of the original sound, because phase interactions introduce frequency-dependent cancellations and reinforcements to the original signal. This can add interesting coloration ranging from hollow to metallic or strangely electronic-sounding. While this type of destructive alteration of the source material might not be appropriate for standard musical fare, it might be just the effect that you want for an alien sound.
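The parameters above map fairly directly onto a short delay line whose read position is swept by a sine LFO. The following is a minimal sketch under stated assumptions, not the implementation of any particular API: the function name `chorus` and its defaults are hypothetical, depth is given as a fraction (0.25 meaning 25 percent) rather than a percentage, and linear interpolation is used to read between samples.

```python
import math

def chorus(samples, sample_rate, delay_ms=16.0, depth=0.25,
           lfo_hz=1.0, mix=0.5, feedback=0.0):
    """Mix the input with a copy whose delay time is modulated by a sine LFO."""
    depth = min(max(depth, 0.0), 1.0)
    base = delay_ms / 1000.0 * sample_rate        # baseline delay in samples
    size = int(2 * base) + 2                      # room for the longest delay
    buffer = [0.0] * size                         # circular delay line
    out = []
    for n, x in enumerate(samples):
        lfo = math.sin(2.0 * math.pi * lfo_hz * n / sample_rate)
        delay = max(1.0, base * (1.0 + depth * lfo))   # modulated delay
        read_time = n - delay
        i0 = math.floor(read_time)
        frac = read_time - i0
        # Linear interpolation between the two nearest stored samples.
        delayed = ((1.0 - frac) * buffer[i0 % size]
                   + frac * buffer[(i0 + 1) % size])
        buffer[n % size] = x + feedback * delayed      # feedback may be negative
        out.append((1.0 - mix) * x + mix * delayed)
    return out
```

Setting `depth` to 0 reduces the effect to a plain 16 ms doubling echo; raising it widens the sweep of delay times and makes the pitch variation more obvious.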

Sound waves occur in a periodic manner. The number of times that one cycle of a wave repeats during a given time interval is called the frequency of the wave. For sound waves, frequency is measured in cycles per second, or hertz (Hz).

Sound frequency is analogous to pitch; the higher the frequency, the higher the perceived pitch. The generally accepted range for audible frequencies is from 20 Hz to 20,000 Hz (20 kHz).

Complex sound waveforms, like the human voice or a piano note, are actually composed of a fundamental sine wave and a number of additional sine waves, called harmonics. The fundamental and the harmonics each occur at different frequencies, with the harmonics occurring at successively higher multiples of the fundamental frequency. When you hear a note played on a piano, the pitch that you perceive for the note is the pitch of the fundamental frequency. It is the combination of the fundamental and the harmonics that gives the piano its unique character, or timbre.
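The idea of a fundamental plus harmonics can be illustrated by summing sine waves at integer multiples of the fundamental frequency. The function name `harmonic_tone` and the example amplitudes are hypothetical; real instruments have harmonic amplitudes that also change over time.

```python
import math

def harmonic_tone(fundamental_hz, amplitudes, sample_rate, n_samples):
    """Sum sine waves at 1x, 2x, 3x ... the fundamental frequency.

    amplitudes[0] is the level of the fundamental, amplitudes[1] the level
    of the second harmonic, and so on.
    """
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        out.append(sum(a * math.sin(2.0 * math.pi * fundamental_hz * (k + 1) * t)
                       for k, a in enumerate(amplitudes)))
    return out

# A 100 Hz tone with two weaker harmonics at 200 Hz and 300 Hz.
tone = harmonic_tone(100.0, [1.0, 0.5, 0.25], sample_rate=8000, n_samples=160)
```

Changing the amplitude list changes the timbre, but the waveform still repeats at the fundamental's period, which is why the perceived pitch stays the same.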

Frequency domain effects change the relationship between the various components of a complex waveform by changing the amplitude within a given frequency range. This process is commonly called equalization, and the devices (real or virtual) that create this effect are called equalizers. The most common equalizers are the bass and treble controls that can be found on most home stereo equipment.

This effect alters the frequency content of a sound by boosting or attenuating a range of frequencies. An equalizer can be used to simulate various audio filters.

Center; frequency at which the maximum boost/attenuation occurs

Bandwidth; range of frequencies about the center

Gain; amount of boost/attenuation in decibels
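One common way to realize these three parameters digitally is a second-order "peaking" filter. The sketch below uses the widely published Audio EQ Cookbook coefficient formulas; the function names are hypothetical, and treating bandwidth as a width in hertz (so that Q = center / bandwidth) is a simplifying assumption, since some APIs specify bandwidth in other units.

```python
import cmath
import math

def peaking_eq_coefficients(center_hz, bandwidth_hz, gain_db, sample_rate):
    """Return (b, a) coefficients of a second-order peaking filter."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * center_hz / sample_rate
    q = center_hz / bandwidth_hz                 # assumed bandwidth-to-Q mapping
    alpha = math.sin(w0) / (2.0 * q)
    b = (1.0 + alpha * amp, -2.0 * math.cos(w0), 1.0 - alpha * amp)
    a = (1.0 + alpha / amp, -2.0 * math.cos(w0), 1.0 - alpha / amp)
    return b, a

def response_db(b, a, freq_hz, sample_rate):
    """Gain of the filter, in dB, at a single frequency."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate)   # z^-1
    numerator = b[0] + b[1] * z1 + b[2] * z1 * z1
    denominator = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20.0 * math.log10(abs(numerator / denominator))
```

Evaluating `response_db` at the center frequency returns the requested gain, while frequencies far from the center are left almost untouched.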

Amplitude domain effects process sound volume. The unit of measurement for sound volume is the decibel (dB). By itself, the decibel has little meaning; it is meaningful only when referenced to some standard level. Therefore, it is not useful to say, “The volume of the sound is 85 dB,” unless it is understood to mean that the sound is 85 dB louder than the threshold of hearing. It is useful, and common, to use positive values to refer to volume increases above some reference level, and to use negative values to refer to volume decreases below the reference. Therefore, it is useful to say, “The threshold level is set at -50 dB,” if, for instance, it is understood to mean that the threshold is 50 dB below some predetermined level, like digital full scale.

The range of possible volumes that can be represented by a given system, analog or digital, is called the dynamic range. For digital systems, each doubling of the level yields a 6 dB increase in volume. Conversely, each halving of the level yields a 6 dB decrease in volume. Given that each binary digit corresponds to a doubling of available values, a 16-bit representation of audio can effectively represent a 96 dB dynamic range (16 x 6 = 96). A particular sound or soundtrack can have its own dynamic range as well, which clearly must be less than or equal to the available dynamic range of the system.
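The 6 dB per bit rule of thumb comes from the amplitude ratio formula 20 · log10(ratio). A short check, with hypothetical function names:

```python
import math

def ratio_to_db(amplitude_ratio):
    """Express an amplitude ratio in decibels."""
    return 20.0 * math.log10(amplitude_ratio)

def dynamic_range_db(bits):
    """Approximate dynamic range of linear PCM audio with the given bit depth."""
    return ratio_to_db(2 ** bits)
```

Doubling the level gives about 6.02 dB, so 16 bits give about 96.3 dB, which the text rounds to 96 dB.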

The simplest amplitude domain effect is the gargle effect. As you can imagine, this effect causes the audio material to sound like gargling, by using an LFO to modulate the amplitude of the sound.

Rate Parameter; enables you to specify the frequency of the LFO that creates the effect. Higher values yield faster-sounding gargle effects.

Waveform Parameter; enables you to specify the type of waveform created by the LFO. Applying a triangle wave shape to the LFO creates a gargle effect with a sharp character. Applying a square wave shape to the LFO creates a gargle effect with an even more abrupt modulation. Which one you choose is a matter of taste and application. You should experiment with each waveform shape to hear for yourself the effect that it creates.
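Amplitude modulation by an LFO is simple enough to sketch directly. The function names and the choice to sweep the gain between 0 and 1 are assumptions for the example; the two waveform options mirror the triangle and square shapes described above.

```python
def lfo_gain(phase, waveform):
    """Gain between 0 and 1 for an LFO phase in the range [0, 1)."""
    if waveform == "square":
        return 1.0 if phase < 0.5 else 0.0
    # Triangle: ramps from 0 up to 1 and back down over one cycle.
    return 2.0 * phase if phase < 0.5 else 2.0 * (1.0 - phase)

def gargle(samples, sample_rate, rate_hz=20.0, waveform="triangle"):
    """Multiply each sample by a low-frequency triangle or square wave."""
    out = []
    for n, x in enumerate(samples):
        phase = (n * rate_hz / sample_rate) % 1.0
        out.append(x * lfo_gain(phase, waveform))
    return out
```

The square wave switches the sound fully on and off, which is why it sounds more abrupt than the triangle wave's gradual ramp.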

Distortion (specifically, harmonic distortion) is a change in the sound that happens when the amplitude of the signal exceeds the available range. The result is that additional harmonic artifacts are created as the shape of the waveform is changed, or “clipped”. You have probably heard distortion before; it is that fuzzy sound that happens when, for instance, a speaker is damaged. Usually, distortion is something that audio engineers seek to avoid because it changes the sound in unwanted ways. However, sometimes distortion is a desirable effect, most frequently used in electric guitar amplifiers. The signal that an electric guitar creates tends to have a clean, pure sound. Deliberately adding some distortion to the signal can make the sound more interesting.

Of course, you don't have to use the distortion effect strictly to enhance musical instruments. You might use it to create a special effect for your game. For example, this effect can create the impression of a noisy radio transmission. The distortion effect exposes the following parameters:

Edge Parameter; enables you to specify the intensity of distortion in the output signal. (Distortion is often characterized as having an “edgy” sound.) Values close to 100 percent tend to make the output signal sound unintelligible, so usually you will want to use values less than 50 percent.

Post-EQ Bandwidth Parameter; lets you adjust the range of frequencies (relative to the center frequency) that are boosted. You specify this range in hertz. Wider-range values result in more harmonics being generated, thereby resulting in denser-sounding distortion.

Pre-low-pass Cutoff Parameter; Because the harmonics that make up the audible distortion signal are higher multiples of fundamental frequencies, it is useful to filter out high-frequency content from the original signal before creating distortion. To enable this, the distortion effect provides the pre-low-pass cutoff parameter. This value represents the frequency above which all frequencies are attenuated.

Gain Parameter; lets you adjust the volume of the output of the effect.
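The clipping at the heart of the effect can be sketched as follows. This is a deliberately reduced illustration: the pre-low-pass and post-EQ stages are omitted, and the mapping from the edge value (given here as a fraction, 0.5 meaning 50 percent) to a clipping ceiling of `1 - edge` is a hypothetical choice, not the behaviour of any real API.

```python
def distort(samples, edge=0.15, gain_db=0.0):
    """Hard-clip samples in the range -1..1, then rescale and apply output gain.

    A larger edge lowers the clipping ceiling, so more of the waveform is
    flattened and more harmonics are added (assumed mapping).
    """
    if not 0.0 <= edge < 1.0:
        raise ValueError("edge must be in the range [0, 1)")
    ceiling = 1.0 - edge
    out_gain = 10.0 ** (gain_db / 20.0) / ceiling   # restore peaks to full scale
    return [max(-ceiling, min(ceiling, x)) * out_gain for x in samples]
```

With an edge of 0 nothing is clipped; with an edge of 0.5 every sample above half of full scale is flattened, which is already a heavy distortion.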

Dynamic compression is the process of reducing the dynamic range of a signal in response to changes in signal level. In the analog world, this is accomplished by using an amplifier with an output gain that varies in response to the input level. When the amplifier’s input exceeds a predetermined level, called the threshold, the circuitry reduces the output gain by a predetermined amount. The amount of gain reduction is usually expressed as a ratio. For example, a 3-to-1 ratio means that for every 3 dB by which the input signal exceeds the threshold, the output level rises by only 1 dB.

Dynamic compression can be used for several purposes. First, it is useful to help prevent an input signal from exceeding the dynamic range of the medium. For instance, if you were to record a source with a 110 dB dynamic range as a 16-bit digital signal, you would need to compress the dynamic range by about 14 dB because 16-bit digital audio has a 96 dB dynamic range. To be absolutely sure that the signal never exceeds the dynamic range, you can use a very high compression ratio with a threshold close to the limit of the medium. This type of compression is called limiting.

Dynamic compression is also used as an effect. A little bit of compression can help make a musical performance sound better. For instance, if a particular vocalist is inconsistent with his or her microphone usage, a compressor can even out the dynamics. If the vocalist is performing with a loud band, the compression can ensure that each syllable of each word can be heard and understood. Keep this idea in mind when mixing sounds for your game soundtrack, because the compressor effect might be able to fix problems in the source sounds.

Threshold Parameter; You can set the threshold for the compressor effect by specifying a value for the threshold parameter. This parameter lets you set the volume level at which the compression effect engages. This parameter has a maximum value of zero, which represents digital full scale. Other values are specified in decibels below full scale. The value that you choose for this parameter depends on the dynamics of the source material and the effect you are trying to achieve. If you set the threshold higher than the maximum signal level, no compression will occur. If you set it too low, you risk limiting the dynamics of the source material, which can make the audio turn “dark” or muddy sounding.

Ratio Parameter; The ratio parameter lets you specify how much gain reduction occurs when the threshold level is exceeded. For instance, a value of 3 represents a 3-to-1 compression ratio. Again, the value you choose for this parameter depends on the content. High values (greater than 10) make the effect behave like a hard limiter. This can be useful if you want to ensure that the volume of the material doesn’t ever exceed some particular level. The range between 2 and 10 represents the most commonly used settings. In this range, the effect can be more subtle, less noticeable, while still helping to smooth out the dynamics of the content.

Pre-delay Parameter; Some sounds start with a sharp part, called the attack, followed by a lower-level sustained portion, which is then followed by a decaying portion, called the release. For example, think of a piano note. When you press a piano key, a hammer inside the piano momentarily strikes a taut wire. The hammer strike creates the attack portion of the sound. Once the hammer moves away from the wire, the note sustains and decays for as long as the piano key is depressed.

Now, suppose you want to apply some compression to a piano soundtrack. You want to help even out the dynamics of the performance to keep it audible, but you also need to maintain the relationship between the hammer strikes and the sustaining notes. In other words, you don’t want the compressor effect to engage every time that a hammer strikes a wire, because this would change the very nature of how the piano sounds.

You can prevent the compressor effect from functioning too soon by specifying a value for the pre-delay parameter. Pre-delay introduces a very short hesitation before the compressor effect engages after the compression threshold is exceeded. This is an ideal way to prevent compression of the attack portion of a sound.

Attack Parameter; The attack parameter enables you to specify how long it takes for compression to reach the specified ratio. For instance, suppose you set a ratio of 10 to 1. If the compressor effect reduces the volume by that ratio instantly each time the level exceeds the threshold, the result can be a “pumping” effect when the volume changes introduced by compression become obvious. Specifying a longer attack time causes the effect to ease in more gradually. Conversely, if you want the compression to happen right away, specify a very short time for the attack parameter.

Release Parameter; Like the attack parameter, the release parameter affects the way the compressor effect behaves over time. When the level drops below the threshold, the compression takes some amount of time to disengage, or release. How suddenly this happens depends on the time that you specify for the release parameter.

Gain Parameter; The gain parameter allows you to change the output level of the effect. Typically, this is most useful for “make-up gain.” Often, the end result of applying the compressor effect is that the average volume of the sound lowers. By adding some gain after the compression, you can restore the apparent volume level. This helps to make the softer portions sound louder.
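The way threshold, ratio, attack, release and gain interact can be seen in a small sample-by-sample sketch. It is a simplified model under stated assumptions: the function name and defaults are hypothetical, the level is measured per sample rather than over a window, the attack and release are modelled as one-pole smoothing of the gain reduction, and the pre-delay parameter is left out.

```python
import math

def compress(samples, sample_rate, threshold_db=-20.0, ratio=3.0,
             attack_ms=10.0, release_ms=200.0, gain_db=0.0):
    """Reduce level above the threshold by `ratio`, easing in and out over time."""
    attack = math.exp(-1000.0 / (attack_ms * sample_rate))
    release = math.exp(-1000.0 / (release_ms * sample_rate))
    reduction_db = 0.0                       # current gain reduction, >= 0
    out = []
    for x in samples:
        level_db = 20.0 * math.log10(max(abs(x), 1e-9))   # 0 dB = full scale
        over_db = max(0.0, level_db - threshold_db)
        # For every `ratio` dB over the threshold, let only 1 dB through.
        target_db = over_db * (1.0 - 1.0 / ratio)
        coeff = attack if target_db > reduction_db else release
        reduction_db = coeff * reduction_db + (1.0 - coeff) * target_db
        out.append(x * 10.0 ** ((gain_db - reduction_db) / 20.0))
    return out
```

A full-scale signal with a -20 dB threshold and a 4-to-1 ratio settles at 15 dB of gain reduction, while a signal below the threshold passes through unchanged apart from any make-up gain.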

DirectSound was designed to be the primary audio API for games on Windows. Until DirectX 9.0, DirectSound was used to interface with audio hardware, which implemented positional audio and audio effects. DirectSound3D (DS3D) is the positional element of the DirectSound API.

From DirectX 10 onwards (Windows Vista and Windows 7), DirectSound can no longer access audio hardware; instead it uses Vista's software-based sound mixer and effects system. This significantly reduces the audio capabilities of applications which use DirectSound and imposes a significant processing burden.

It is believed that this move is to allow for more secure DRM.

Environmental Audio Extensions (EAX) are a set of environmental audio effects available on Creative Labs audio cards, together with a set of extensions to the DirectSound and OpenAL APIs.

The basic functionality of EAX is to provide a number of different preset reverb environments; later additions included support for environmental morphing and occlusions.

EAX has become the de facto standard for environmental audio processing on the PC.

EAX is an audio processing layer controlled by an API like OpenAL.

The Cross-Platform Audio Creation Tool (XACT) is a PC/Xbox 360 based development system, integrated with XNA (although XACT can be used in other development environments). The XACT Audio Authoring Tool is a companion application used to organize audio assets.

OpenAL is a cross-platform audio library, deliberately designed to look similar to OpenGL. Technically, OpenAL is not an open standard, as it is controlled by Creative Labs; however, the source code is freely available.

OpenAL is used by games engines like Doom 3 and Unreal.

The removal of hardware support for DirectSound in DirectX 10 (Vista) may mean that OpenAL is becoming the standard free audio platform for Windows games.

FMOD is a proprietary audio library; it is cross-platform but not free for commercial use. FMOD is probably the most dominant audio engine in use today.

Turcan, P., Watson, M. Fundamentals of Audio and Video Programming, Microsoft Press 2004

Menshikov, A. "Modern Audio Technologies in Games" Digit-life.com, http://www.digit-life.com/articles2/sound-technology/index.html (accessed December 2, 2006).

Wikipedia contributors, "Surround sound," Wikipedia, The Free Encyclopedia, http://en.wikipedia.org/w/index.php?title=Surround_sound&oldid=99210469 (accessed January 12, 2007).

Wikipedia contributors, "Head-related transfer function," Wikipedia, The Free Encyclopedia, http://en.wikipedia.org/w/index.php?title=Head-related_transfer_function&oldid=61119155 (accessed January 12, 2007).

© Ken Power 1996-2016