Last Updated February 4, 2010

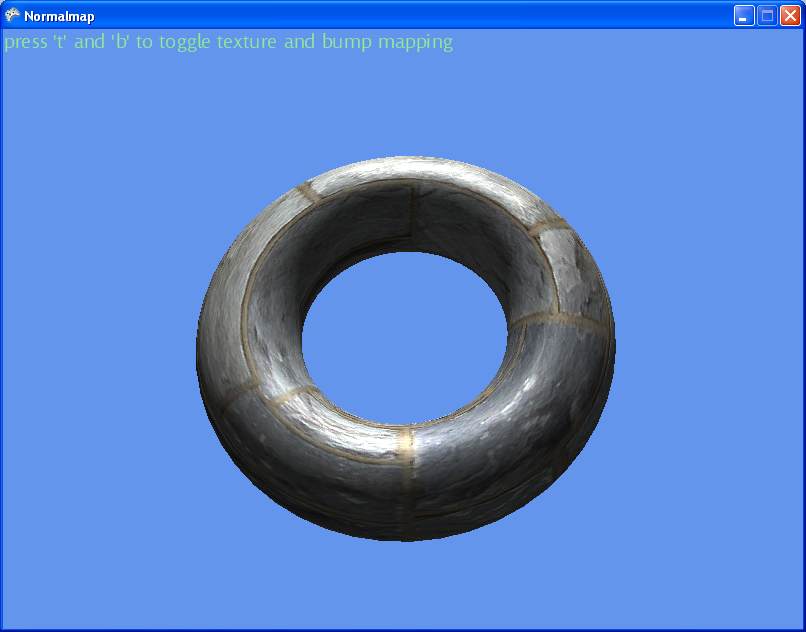

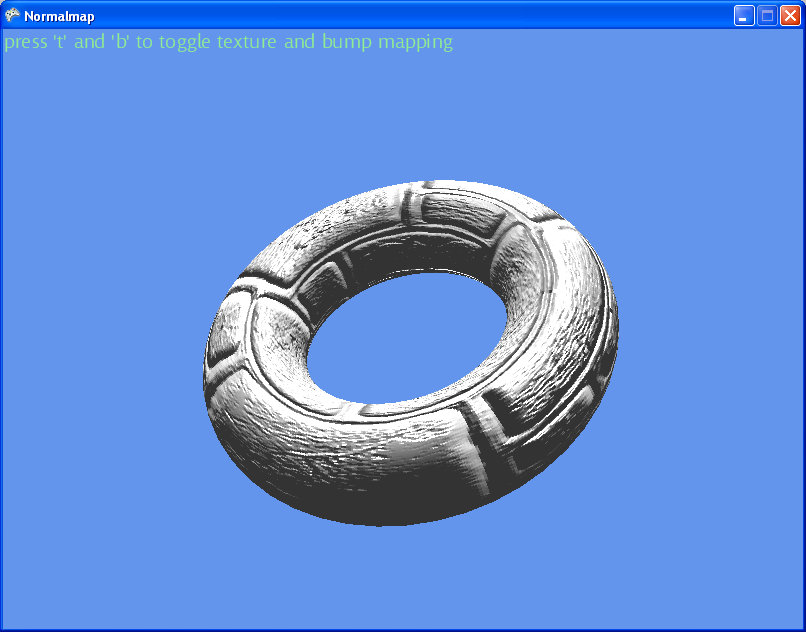

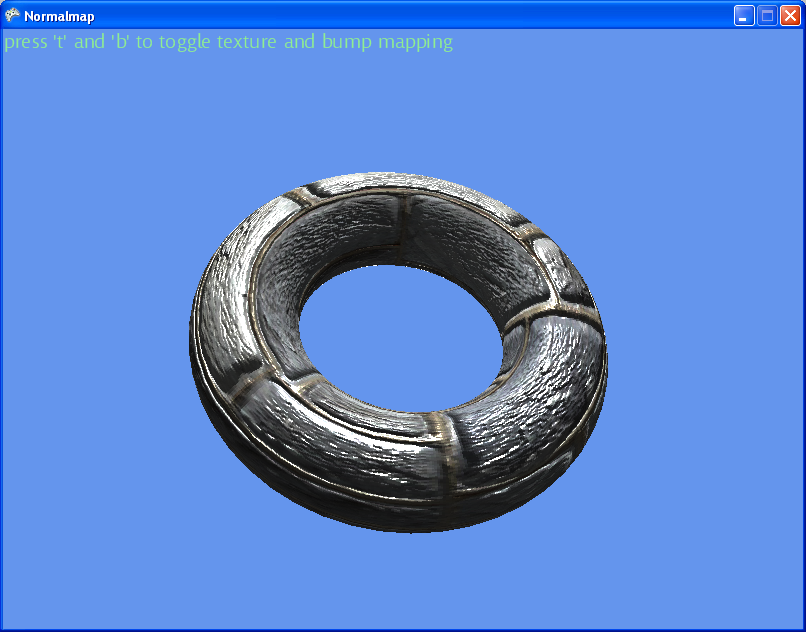

This flash app shows normal mapping on a Halo model.

This is the full project (XNA 3.1), based on the following code.

Normal mapping requires tangent and binormal vectors for each vertex, along with the position, texture coordinate and normal. XNA does not currently provide a built-in vertex format that carries all five properties.

The following code shows a struct which can be used for specifying vertices during normal mapping.

public struct VertexPosTexNormalTanBitan

{

Vector3 pos;

Vector2 tex;

Vector3 normal, tan, bitan;

public static readonly VertexElement[] VertexElements =

new VertexElement[] {

new VertexElement(0,0,VertexElementFormat.Vector3,VertexElementMethod.Default,VertexElementUsage.Position,0),

new VertexElement(0,sizeof(float)*3,VertexElementFormat.Vector2,VertexElementMethod.Default,VertexElementUsage.TextureCoordinate,0),

new VertexElement(0,sizeof(float)*5,VertexElementFormat.Vector3,VertexElementMethod.Default,VertexElementUsage.Normal,0),

new VertexElement(0,sizeof(float)*8,VertexElementFormat.Vector3,VertexElementMethod.Default,VertexElementUsage.Tangent,0),

new VertexElement(0,sizeof(float)*11,VertexElementFormat.Vector3,VertexElementMethod.Default,VertexElementUsage.Binormal,0),

};

public VertexPosTexNormalTanBitan(Vector3 position, Vector2 uv, Vector3 normal, Vector3 tan, Vector3 bitan)

{

pos = position;

tex = uv;

this.normal = normal;

this.tan = tan;

this.bitan = bitan;

}

public static bool operator !=(VertexPosTexNormalTanBitan left, VertexPosTexNormalTanBitan right)

{

return !(left == right);

}

public static bool operator ==(VertexPosTexNormalTanBitan left, VertexPosTexNormalTanBitan right)

{

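// Compare the fields directly: equal hash codes do not guarantee equal vertices
return left.pos == right.pos && left.tex == right.tex && left.normal == right.normal && left.tan == right.tan && left.bitan == right.bitan;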

}

public override bool Equals(object obj)

{

// Guard against null and other types before casting
return obj is VertexPosTexNormalTanBitan && this == (VertexPosTexNormalTanBitan)obj;

}

public Vector3 Position { get { return pos; } set { pos = value; } }

public Vector3 Normal { get { return normal; } set { normal = value; } }

public Vector2 Tex { get { return tex; } set { tex = value; } }

public Vector3 Tan { get { return tan; } set { tan = value; } }

public Vector3 Bitan { get { return bitan; } set { bitan = value; } }

public static int SizeInBytes { get { return sizeof(float) * 14; } }

public override int GetHashCode()

{

// XOR mixes the bits; OR would push the result towards all ones
return pos.GetHashCode() ^ tex.GetHashCode() ^ normal.GetHashCode() ^ tan.GetHashCode() ^ bitan.GetHashCode();

}

public override string ToString()

{

return string.Format("{0},{1},{2}", pos.X, pos.Y, pos.Z);

}

}
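
As a sanity check on the offsets and on `SizeInBytes`, the sketch below mirrors the field layout with plain float structs and asks the runtime for each field's offset. `Float3`, `Float2`, `VertexLayoutMirror` and `VertexLayoutCheck` are hypothetical names introduced for illustration; they stand in for the XNA types so that the code compiles without the XNA assemblies.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical stand-ins for XNA's Vector3 and Vector2 (assumption:
// both are plain sequences of 32-bit floats, as the offsets above imply).
[StructLayout(LayoutKind.Sequential)]
public struct Float3 { public float X, Y, Z; }

[StructLayout(LayoutKind.Sequential)]
public struct Float2 { public float X, Y; }

// Same field order as VertexPosTexNormalTanBitan.
[StructLayout(LayoutKind.Sequential)]
public struct VertexLayoutMirror
{
    public Float3 Pos;
    public Float2 Tex;
    public Float3 Normal, Tan, Bitan;
}

public static class VertexLayoutCheck
{
    // Byte offset of the named field inside one vertex.
    public static int OffsetOf(string fieldName)
    {
        return (int)Marshal.OffsetOf(typeof(VertexLayoutMirror), fieldName);
    }

    // Total size of one vertex in bytes.
    public static int Stride()
    {
        return Marshal.SizeOf(typeof(VertexLayoutMirror));
    }
}
```

With this layout, `OffsetOf("Tex")`, `OffsetOf("Normal")`, `OffsetOf("Tan")` and `OffsetOf("Bitan")` return 12, 20, 32 and 44, and `Stride()` returns 56. These match the `sizeof(float)` multiples of 3, 5, 8, 11 and 14 used in the struct.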

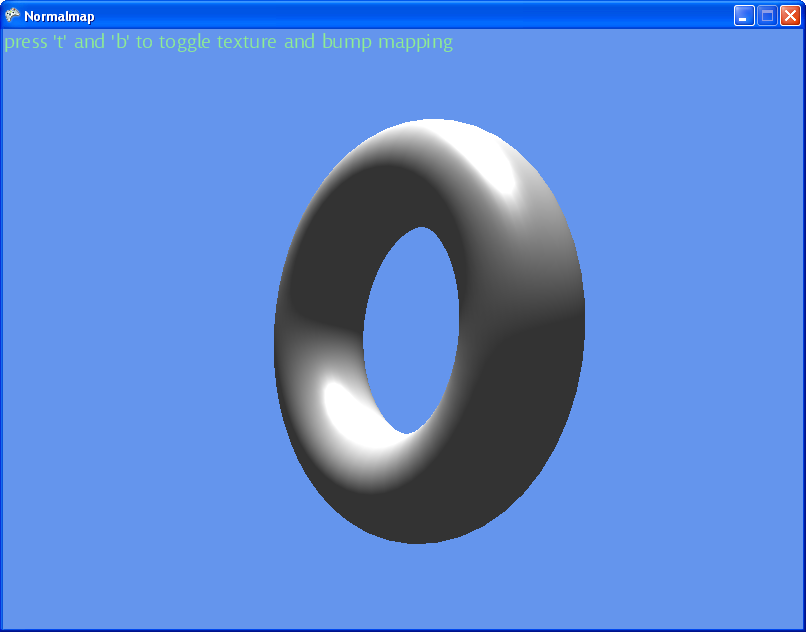

Because an object moves with respect to the eye and the lights, while a normal map stores normals for one orientation only, there is a problem reconciling the normal read from the normal map with the actual surface of the object. The normal provided by the normal map is only valid in 'texture space'.

We solve this by bringing the light vector and half vector into texture space and doing the lighting calculations there. The transformation to texture space for a vertex is built from the vertex normal and two further vectors, the binormal and the tangent (all three are mutually perpendicular). These vectors need to be specified for every vertex in the model.
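
The page does not show how the tangent and binormal are obtained. One common approach, sketched here as an assumption rather than as the method used in the project, derives them for each triangle from its positions and texture coordinates and then makes them perpendicular to the vertex normal. `TangentBasis` and its methods are hypothetical names, and `System.Numerics` is used in place of the XNA math types so the sketch runs on its own.

```csharp
using System;
using System.Numerics;

public static class TangentBasis
{
    // Tangent follows increasing U across the triangle, bitangent follows increasing V.
    public static void ForTriangle(
        Vector3 p0, Vector3 p1, Vector3 p2,
        Vector2 uv0, Vector2 uv1, Vector2 uv2,
        out Vector3 tangent, out Vector3 bitangent)
    {
        Vector3 e1 = p1 - p0;
        Vector3 e2 = p2 - p0;
        float du1 = uv1.X - uv0.X, dv1 = uv1.Y - uv0.Y;
        float du2 = uv2.X - uv0.X, dv2 = uv2.Y - uv0.Y;

        float det = du1 * dv2 - du2 * dv1;
        if (Math.Abs(det) < 1e-8f)
        {
            // Degenerate UV mapping: fall back to arbitrary axes.
            tangent = Vector3.UnitX;
            bitangent = Vector3.UnitY;
            return;
        }

        float r = 1.0f / det;
        tangent = Vector3.Normalize((e1 * dv2 - e2 * dv1) * r);
        bitangent = Vector3.Normalize((e2 * du1 - e1 * du2) * r);
    }

    // Gram-Schmidt step: make the tangent perpendicular to the normal, then
    // rebuild the bitangent from both, keeping its original handedness.
    // Assumes the tangent is not parallel to the normal.
    public static void Orthonormalize(Vector3 normal, ref Vector3 tangent, ref Vector3 bitangent)
    {
        Vector3 n = Vector3.Normalize(normal);
        Vector3 t = Vector3.Normalize(tangent - n * Vector3.Dot(n, tangent));
        Vector3 b = Vector3.Cross(n, t);
        float sign = Vector3.Dot(b, bitangent) < 0f ? -1f : 1f;
        tangent = t;
        bitangent = b * sign;
    }
}
```

For a triangle lying in the XY plane whose texture coordinates match its X and Y positions, `ForTriangle` yields a tangent along X and a bitangent along Y. Per-triangle results are typically accumulated at each shared vertex before the orthonormalisation step.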

Combining these three vectors as the columns of a 3x3 matrix gives a transformation that takes a vector from object space into texture space.
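
Written out, and assuming the row-vector convention that the shader's `mul(vector, matrix)` calls use, the matrix and its effect on an object-space vector $v$ look like this. $T$, $B$ and $N$ denote the unit tangent, binormal and normal; the symbols $M$ and $v_{tex}$ are introduced here for illustration.

```latex
M =
\begin{pmatrix}
T_x & B_x & N_x \\
T_y & B_y & N_y \\
T_z & B_z & N_z
\end{pmatrix},
\qquad
v_{tex} = v\,M = \left( v \cdot T,\; v \cdot B,\; v \cdot N \right)
```

Each component of the result is the projection of $v$ onto one axis of the texture-space basis, which is why the three vectors must be unit length and mutually perpendicular.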

Once in texture space, the lighting calculations can be carried out.

VS_OUTPUT vs_BumpandTextureMap(float4 inPos: POSITION, float3 inNormal: NORMAL,

float3 inTangent:TANGENT, float3 inBinormal:BINORMAL,

float2 inTxr: TEXCOORD0)

{

VS_OUTPUT Out;

// Compute the projected position and send out the texture coordinates

Out.Pos = mul(inPos,view_proj_matrix);

Out.TexCoord = inTxr;

// Determine the direction from the vertex to the light, in object space

float4 LightDir;

LightDir.xyz = normalize(mul(light_position,inv_world_matrix) - inPos);

// Determine the eye vector

float3 EyeVector = normalize(mul(view_position,inv_world_matrix)-inPos);

//calc half vector

float3 HalfVector = normalize(LightDir.xyz+EyeVector);// average of the two vectors

// Transform to texture space and output

// half vector and light direction

float3x3 TangentSpace;

TangentSpace[0] = normalize(inTangent);

TangentSpace[1] = normalize(inBinormal);

TangentSpace[2] = normalize(inNormal);

TangentSpace=transpose(TangentSpace);

//transform vectors to texture space

Out.HalfVect = float4(mul(HalfVector.xyz,TangentSpace),1);

Out.LightDir = float4(mul(LightDir.xyz,TangentSpace),1);

Out.Normal=inNormal;

return Out;

}

float4 ps_BumpandTextureMap(float2 inTxr:TEXCOORD0,float4 LightDir:TEXCOORD1,

float3 HalfVect:TEXCOORD2) : COLOR

{

// Read normal map

//texture values are stored in range 0 to 1, convert to range -1 to +1

float3 normal = tex2D(Bump,inTxr).xyz * 2 - 1;

// Output lighting color and texture map

return tex2D(Texture0,inTxr)*(Light_Point(normal,HalfVect,LightDir));

}
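
`Light_Point` is not listed on this page. As a rough CPU-side illustration of the kind of calculation it might perform, the sketch below decodes a normal-map texel and evaluates a Blinn-Phong term from the texture-space normal, half vector and light direction. The class, the method names and the `shininess` parameter are assumptions, not the project's actual code.

```csharp
using System;
using System.Numerics;

public static class TextureSpaceLighting
{
    // Maps an RGB texel from the range [0, 1] to a unit normal in [-1, 1].
    public static Vector3 DecodeNormal(Vector3 texel)
    {
        return Vector3.Normalize(texel * 2f - Vector3.One);
    }

    // Blinn-Phong intensity: diffuse from N.L plus specular from N.H.
    // All three vectors are expected in the same (texture) space.
    public static float BlinnPhong(Vector3 normal, Vector3 halfVect, Vector3 lightDir, float shininess)
    {
        Vector3 n = Vector3.Normalize(normal);
        Vector3 h = Vector3.Normalize(halfVect);
        Vector3 l = Vector3.Normalize(lightDir);

        float diffuse = Math.Max(Vector3.Dot(n, l), 0f);

        // No highlight on surfaces that face away from the light.
        float specular = diffuse > 0f
            ? (float)Math.Pow(Math.Max(Vector3.Dot(n, h), 0f), shininess)
            : 0f;

        return diffuse + specular;
    }
}
```

A flat normal-map texel of (0.5, 0.5, 1) decodes to (0, 0, 1), the unperturbed surface normal in texture space.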

© Ken Power 1996-2016