Last Updated February 26, 2009

Once a 3D model has been completed, its coordinates need to be converted to two dimensions in order to display the scene on a flat computer monitor or to print it on paper. This process is called projection. The visual appearance of a 3D model depends greatly on the position of the viewer (among other things), so this must be taken into account when projecting a model. There are two main types of projection: parallel and perspective.

Parallel projections are used by drafters and engineers to create working drawings of an object that preserve scale and shape. In parallel projection, image points are found at the intersection of the view plane with a ray drawn from the object point in a fixed direction. The direction of projection is the same for all rays (all rays are parallel). A parallel projection is described by prescribing a direction-of-projection vector \vec{\mathbf{v}} and a viewplane. The object point P is located at (x,y,z) and we need to determine the image point coordinates P'(x',y',z'). If the projection vector \vec{\mathbf{v}} has the same direction as the viewplane normal, the projection is said to be orthogonal; otherwise the projection is oblique.
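The ray-plane intersection described above can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: the function name `parallel_project` and the representation of the viewplane as a point plus a normal are assumptions made for the example.

```python
def parallel_project(p, direction, plane_point, plane_normal):
    """Project point p along `direction` onto the plane that passes through
    `plane_point` and has normal `plane_normal` (all arguments are 3-tuples)."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        raise ValueError("direction of projection is parallel to the viewplane")
    # Solve (p + t*direction - plane_point) . plane_normal = 0 for t.
    t = dot([q - x for q, x in zip(plane_point, p)], plane_normal) / denom
    return tuple(x + t * d for x, d in zip(p, direction))


# Viewplane z = 0 in both cases.
# Orthogonal: the direction of projection equals the viewplane normal.
ortho = parallel_project((1.0, 2.0, 5.0), (0.0, 0.0, 1.0),
                         (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
# Oblique: the direction of projection is not along the normal.
oblique = parallel_project((1.0, 2.0, 5.0), (1.0, 0.0, 1.0),
                           (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
```

In the orthogonal case the x and y coordinates are unchanged, while the oblique case shifts the image point sideways by an amount proportional to the depth of the object point.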

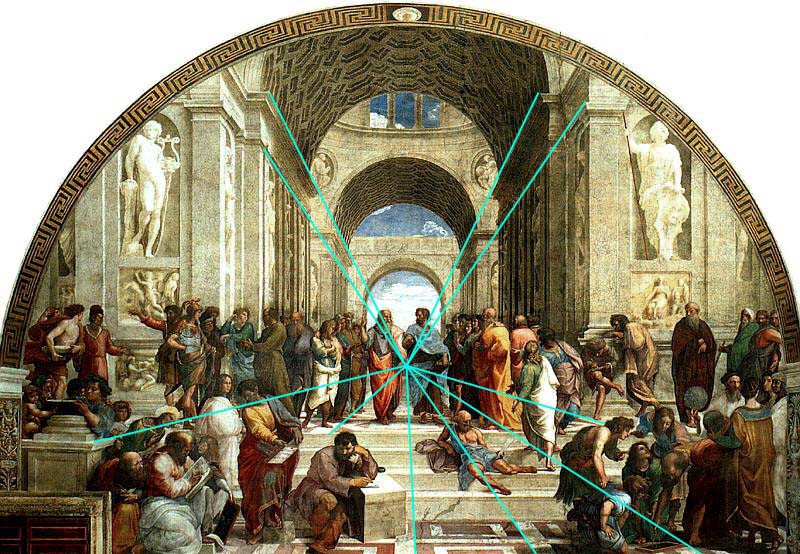

Perspective projections are used to produce images which look natural. When we view scenes in everyday life, far-away items appear small relative to nearer items. This is called perspective foreshortening. A side effect of perspective foreshortening is that parallel lines appear to converge on a vanishing point. An important feature of perspective projections is that they preserve straight lines. This allows us to project only the endpoints of 3D lines and then draw a 2D line between the projected endpoints.

Perspective projection depends on the relative position of the eye and the viewplane. In the usual arrangement the eye lies on the z-axis and the viewplane is the xy plane. To determine the projection of a 3D point, connect the point and the eye by a straight line. The point where this line intersects the viewplane is the projected point.
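As a rough sketch of this construction, the following Python function intersects the line from the eye to a point with the xy plane. The name `perspective_project` and the parameter `eye_z` (the position of the eye on the z-axis) are assumptions introduced for the example.

```python
def perspective_project(point, eye_z):
    """Project `point` = (x, y, z) onto the plane z = 0 as seen from an
    eye at (0, 0, eye_z)."""
    x, y, z = point
    if z == eye_z:
        raise ValueError("point lies in the plane of the eye")
    # The line eye*(1 - t) + point*t has z-component zero at this t.
    t = eye_z / (eye_z - z)
    return (x * t, y * t)


eye_z = -5.0
a = perspective_project((0.0, 0.0, 0.0), eye_z)   # endpoint on the viewplane
b = perspective_project((4.0, 2.0, 5.0), eye_z)   # endpoint deeper in the scene
mid = perspective_project((2.0, 1.0, 2.5), eye_z)  # midpoint of the 3D segment
```

Here `mid` lands on the 2D line through `a` and `b`, which is the straight-line property that lets us project only the endpoints of a segment. Note that it is not the 2D midpoint, because of foreshortening.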

The simple perspective projection described above, while providing a realistic view of an object, is rather restrictive. It requires the eye to lie on a coordinate axis and the viewplane to coincide with a coordinate plane. If we wish to view an object from a different point of view, we must rotate the model of the object. This causes an awkward mix of modelling (describing the objects to be viewed) and viewing (rendering a picture of the object). We will develop a flexible method for viewing that is completely separate from modelling. This method is called the synthetic camera. A synthetic camera is a way to describe a camera (or eye) positioned and oriented in 3D space. The system has three principal ingredients: a view plane in which a window is defined, a viewing coordinate system, and an eye.

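The view plane is defined by a point on the plane called the View Reference Point (VRP) and a normal to the viewplane called the View Plane Normal (VPN). These are defined in the world coordinate system. The viewing coordinate system is defined as follows: its origin is the VRP, its n-axis lies along the VPN, its v-axis is the upward direction within the view plane, and its u-axis is perpendicular to both.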

In order for a rendering application to achieve the required view, the user needs to specify the parameters described below.

To choose a VPN (\vec{n}), the user simply selects a point in the area of interest in the scene. The vector \vec{n} is a unit vector, which can be calculated as follows:

The user should select some point in the scene which they would like to appear as the centre of the rendered view; call this point \stackrel{\longrightarrow}{\mathbf{scene}}. The vector \stackrel{\longrightarrow}{\mathbf{norm}}, a vector lying along \vec{n}, can then be calculated:

\vec{n} must be a unit vector along \stackrel{\longrightarrow}{\mathbf{norm}}, that is, \vec{n}=\stackrel{\longrightarrow}{\mathbf{norm}}/|\stackrel{\longrightarrow}{\mathbf{norm}}|.

Finally, the upward vector must be a unit vector perpendicular to \vec{\mathbf{n}}. Let the user enter a vector \stackrel{\longrightarrow}{\mathbf{up}} and allow the computer to calculate an appropriate vector \vec{\mathbf{v}}.

The vector \vec{\mathbf{u}} can now be calculated: \vec{\mathbf{u}}=\vec{\mathbf{n}} \times \vec{\mathbf{v}}. With the viewing coordinate system set up, a window in the viewplane can be defined by giving minimum and maximum u and v values. The centre of the window (CW) does not have to be the VRP. The eye can be given any position \vec{\mathbf{e}}=(e_{u},e_{v},e_{n}) in the viewing coordinate system. It is usually positioned at some negative value on the n-axis, \vec{\mathbf{e}}=(0,0,e_{n}) with e_{n}<0.
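The construction of the three axes can be sketched as follows. Two points are assumptions rather than statements from the text: that the vector norm is taken from the VRP to the chosen scene point, and that \vec{\mathbf{v}} is obtained by removing from up its component along \vec{\mathbf{n}} and normalising the result. The helper names are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(dot(a, a))
    if length == 0.0:
        raise ValueError("cannot normalise a zero-length vector")
    return tuple(x / length for x in a)


def camera_axes(vrp, scene, up):
    """Return the unit vectors (u, v, n) of the viewing coordinate system."""
    # Assumption: norm points from the VRP towards the chosen scene point.
    n = normalize(sub(scene, vrp))
    # Remove the part of `up` that lies along n, then normalise.
    # This fails if `up` is parallel to n, which the caller must avoid.
    v = normalize(sub(up, tuple(dot(up, n) * c for c in n)))
    # u = n x v, as in the text.
    u = cross(n, v)
    return u, v, n


# The user's `up` vector need not be perpendicular to n.
u, v, n = camera_axes(vrp=(0.0, 0.0, 0.0), scene=(0.0, 0.0, 10.0), up=(0.0, 1.0, 1.0))
```

For this input the result is n = (0, 0, 1), v = (0, 1, 0) and u = (-1, 0, 0). In a right-handed world system, a viewer looking along +z with y upward has -x on their right, so u points to the viewer's right.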

The components of the synthetic camera can be changed to provide different views and animation effects.


We have developed a method for specifying the location and orientation of the synthetic camera. In order to draw projections of models in this system we need to be able to represent our real-world coordinates in terms of \vec{\mathbf{u}}, \vec{\mathbf{v}} and \vec{\mathbf{n}}.

Converting from one coordinate system to another:

Writing the above as a dot product of vectors:

Combining the above displacement with the matrix multiplication into a homogeneous matrix, we get:

We will refer to the above matrix as \mathbf{\hat{A}}_{wv} (world to viewing coordinate transformation). We can now write our coordinate transform as:

The above transformation can be reduced to three simpler relations for computation:

We now have a method for converting world coordinates to viewing coordinates of the synthetic camera. We need to transform all objects from world coordinates to viewing coordinates, as this will simplify the later operations of clipping, projection, etc. We should have a separate data structure to hold the viewing coordinates of an object. The model itself remains uncorrupted and we can have many different views of the model.
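A minimal sketch of the conversion, assuming the viewing coordinates of a world point are the dot products of its displacement from the VRP with the unit axes \vec{\mathbf{u}}, \vec{\mathbf{v}} and \vec{\mathbf{n}}. The function name `world_to_view` and the sample data are hypothetical.

```python
def world_to_view(p, vrp, u_axis, v_axis, n_axis):
    """Express world point p in the viewing coordinate system."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    d = tuple(a - b for a, b in zip(p, vrp))  # displacement from the VRP
    return (dot(d, u_axis), dot(d, v_axis), dot(d, n_axis))


# Camera at VRP (1, 2, 3), looking along +z with y upward.
vrp = (1.0, 2.0, 3.0)
u_axis, v_axis, n_axis = (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)

# The model keeps its world coordinates; each view gets its own list.
model_points = [(2.0, 4.0, 8.0), (1.0, 2.0, 3.0)]
view_points = [world_to_view(p, vrp, u_axis, v_axis, n_axis) for p in model_points]
```

Keeping `view_points` separate from `model_points` reflects the point made above: several cameras can each hold their own transformed copy while the model is left untouched.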

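Consider the ray from the eye \vec{\mathbf{e}} through a point \vec{\mathbf{p}}, written parametrically as \vec{\mathbf{r}}(t)=\vec{\mathbf{e}}(1-t)+\vec{\mathbf{p}}t. This equation is valid for values of t between 0 and 1. We wish to find the coordinates of the ray as it pierces the viewplane, which occurs when r_{n}(t)=0. The best way to do this is to find the 'time' t at which the ray strikes the viewplane: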
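The derivation can be written out as follows, assuming the ray has the parametric form \vec{\mathbf{r}}(t)=\vec{\mathbf{e}}(1-t)+\vec{\mathbf{p}}t with \vec{\mathbf{p}}=(p_{u},p_{v},p_{n}). The symbol t' for the hit time is introduced here for convenience.

```latex
\begin{align*}
r_{n}(t') &= e_{n}(1-t') + p_{n}t' = 0
  \quad\Longrightarrow\quad t' = \frac{e_{n}}{e_{n}-p_{n}} \\[4pt]
u' &= e_{u}(1-t') + p_{u}t' = \frac{e_{n}p_{u} - e_{u}p_{n}}{e_{n}-p_{n}} \\[4pt]
v' &= e_{v}(1-t') + p_{v}t' = \frac{e_{n}p_{v} - e_{v}p_{n}}{e_{n}-p_{n}}
\end{align*}
```

Setting e_{u}=e_{v}=0 reduces these to u'=p_{u}e_{n}/(e_{n}-p_{n}) and v'=p_{v}e_{n}/(e_{n}-p_{n}).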

This gives us the coordinates of the point (u,v,n) when projected onto the view plane. If the eye is on the n-axis, which is the usual case, then both e_{u} and e_{v} are zero, so u' and v' simplify to:

Note that u' and v' do not depend on t. This means that every point on the ray projects to the same point on the viewplane. Even points behind the eye (t<0) are projected to the same point on the viewplane. These points will be eliminated later.

When manipulating 3D entities it is useful to have an additional quantity which retains a measure of the depth of a point. As our analysis stands, we have lost information about the depth of the points because all points are projected onto the viewplane with a depth of zero. We would like to have something which preserves the depth ordering of points. This quantity will be called pseudodepth, and to simplify later calculation we will define it as n'=\frac{p_{n}e_{n}}{e_{n}-p_{n}}.

An increase in actual depth p_{n} causes an increase in n', as required. The simplified equations for u', v' and n' can be rewritten as follows: u'=\frac{u}{1-\frac{n}{e_{n}}}, v'=\frac{v}{1-\frac{n}{e_{n}}}, n'=\frac{n}{1-\frac{n}{e_{n}}}.
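The three relations share the divisor 1 - n/e_{n}, which makes them easy to compute together. The sketch below assumes the eye sits on the n-axis at n = e_{n} (negative in the usual arrangement). The function name `prewarp` is borrowed from the term used later in the text.

```python
def prewarp(point, e_n):
    """Return (u', v', n') for a point (u, v, n) in viewing coordinates,
    with the eye at (0, 0, e_n)."""
    u, v, n = point
    w = 1.0 - n / e_n
    if w == 0.0:
        raise ValueError("point lies in the plane of the eye")
    return (u / w, v / w, n / w)


e_n = -5.0
near = prewarp((2.0, 1.0, 5.0), e_n)    # a point on a ray from the eye
far = prewarp((4.0, 2.0, 15.0), e_n)    # a deeper point on the same ray
```

Both points give the same u' and v', since they lie on one ray through the eye, while the pseudodepth n' of the deeper point is larger, so the depth ordering is preserved.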

We can now write a matrix to implement the above transformation. This is called the Perspective Transformation:

The projection P' of a point P can now be written as:
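One possible form of this matrix is sketched below. It assumes homogeneous column vectors multiplied on the left by the matrix. If row vectors are used instead, the matrix is the transpose. The symbol \mathbf{\hat{M}}_{p} is a label chosen for this illustration.

```latex
\[
\mathbf{\hat{M}}_{p} =
\begin{pmatrix}
1 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & -\frac{1}{e_{n}} & 1
\end{pmatrix},
\qquad
\mathbf{\hat{M}}_{p}
\begin{pmatrix} u \\ v \\ n \\ 1 \end{pmatrix}
=
\begin{pmatrix} u \\ v \\ n \\ 1-\frac{n}{e_{n}} \end{pmatrix}
\;\longrightarrow\;
\left(
\frac{u}{1-\frac{n}{e_{n}}},\;
\frac{v}{1-\frac{n}{e_{n}}},\;
\frac{n}{1-\frac{n}{e_{n}}}
\right)
\]
```

The arrow denotes division by the fourth (homogeneous) component, which recovers u', v' and the pseudodepth n'.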

If the eye is not on the n-axis, the effect of shear must be taken into account. This is implemented by another transformation:

where;

At this stage we have a method for transforming a point from world-coordinates to viewing coordinates and then projecting that point onto the view plane, i.e.

Shifting the eye off the n-axis is mainly useful for stereo applications, so we can fix the eye to the n-axis and eliminate \mathbf{\hat{M}}_{s} for general use. It is now possible to combine the coordinate transformation and projection transformation into one matrix.

The human brain perceives depth in a scene because we have two eyes separated in space, so each eye "sees" a slightly different view, and the brain uses these differences to estimate relative distance. These two views can be artificially generated by setting up a synthetic camera with two "eyes", each offset slightly from the n-axis. Each eye will result in a different projection. If each projection is displayed to the user in a different colour, and the user has appropriately filtered glasses, the 2D display will appear to have depth. Other 3D viewing systems include virtual reality headsets, which have two built-in displays (one for each eye), and LCD goggles. The goggles block the right eye while the left-eye image is being displayed on a large screen, and vice versa. This cycle must occur 50 times a second if the animation is to be smooth.
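A stereo pair can be sketched by projecting the same point from two eye positions. The projection below is the general ray-viewplane intersection for an eye at (e_{u}, e_{v}, e_{n}). The function names and the `separation` parameter (distance between the two eyes along the u-axis) are assumptions made for the example.

```python
def project(point, eye):
    """Intersect the line from `eye` through `point` with the viewplane n = 0.
    Both arguments are (u, v, n) tuples in viewing coordinates."""
    p_u, p_v, p_n = point
    e_u, e_v, e_n = eye
    denom = e_n - p_n
    if denom == 0.0:
        raise ValueError("point lies in the plane of the eye")
    return ((e_n * p_u - e_u * p_n) / denom,
            (e_n * p_v - e_v * p_n) / denom)


def stereo_pair(point, e_n, separation):
    """Project `point` for a left and a right eye offset along the u-axis."""
    half = separation / 2.0
    left = project(point, (-half, 0.0, e_n))
    right = project(point, (half, 0.0, e_n))
    return left, right


# A point in the viewplane looks the same to both eyes.
on_plane = stereo_pair((1.0, 1.0, 0.0), e_n=-5.0, separation=1.0)
# A point deeper in the scene is displaced between the two views.
deeper = stereo_pair((0.0, 0.0, 5.0), e_n=-5.0, separation=1.0)
```

The horizontal difference between the left and right images grows with depth behind the viewplane, and this disparity is the cue the viewer's brain uses to reconstruct distance.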

We must define precisely the region in space that is to be projected and drawn. This region is called the view-volume. In the general case, only a small fraction of the model falls within the field of view of the camera. The part of the model that falls outside the camera's view must be identified and discarded as soon as possible to avoid unnecessary computation.

The view volume is defined in viewing coordinates. The eye and the window defined on the view plane together define a double-sided pyramid extending forever in both directions. To limit the view volume to a finite size, we can define a front plane n=F and a back plane n=B. These are sometimes known as the hither and yon planes. Now the view volume becomes a frustum (truncated pyramid).

We will later develop a clipping algorithm which will clip any part of the world which lies outside the view volume. The effect of clipping to the front plane is to remove objects that lie behind the eye or too close to it. The effect of clipping to the back plane is to remove objects that are too far away and would appear as indistinguishable spots. We can move the front and back planes close to each other to produce "cutaway" drawings of complex objects. Clipping against a volume like a frustum would be a complex process, but if we apply the perspective transformation to all our points, the clipping process becomes trivial. The view volume is defined after the matrix \mathbf{\hat{A}}_{wv} has been applied to each point in world coordinates. The effect of applying the perspective transformation is called pre-warping. If we apply pre-warping to the view volume, it gets distorted into a more manageable shape.
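To illustrate why clipping becomes simple, the sketch below tests whether a single point lies inside the view volume by pre-warping it and comparing against fixed bounds. It is a point-containment test only, not the clipping algorithm developed later. The window tuple `(w_l, w_r, w_b, w_t)` and the function names are assumptions, and the eye is assumed to lie on the n-axis with e_n < F < B.

```python
def prewarp(point, e_n):
    """Return (u', v', n') for a point (u, v, n), with the eye at (0, 0, e_n)."""
    u, v, n = point
    w = 1.0 - n / e_n
    return (u / w, v / w, n / w)


def inside_view_volume(point, e_n, window, front, back):
    """True if `point` (viewing coordinates) lies inside the frustum defined
    by the eye, the window (w_l, w_r, w_b, w_t) and the planes n=front, n=back."""
    w_l, w_r, w_b, w_t = window
    if point[2] <= e_n:
        return False  # at or behind the eye; also avoids division by zero
    u, v, n = prewarp(point, e_n)
    n_front = front / (1.0 - front / e_n)  # pre-warped front plane
    n_back = back / (1.0 - back / e_n)     # pre-warped back plane
    return w_l <= u <= w_r and w_b <= v <= w_t and n_front <= n <= n_back


e_n, window, front, back = -5.0, (-1.0, 1.0, -1.0, 1.0), 1.0, 100.0
centre = inside_view_volume((0.0, 0.0, 10.0), e_n, window, front, back)
wide = inside_view_volume((2.5, 0.0, 10.0), e_n, window, front, back)
off_side = inside_view_volume((10.0, 0.0, 10.0), e_n, window, front, back)
too_far = inside_view_volume((0.0, 0.0, 200.0), e_n, window, front, back)
```

The point with u = 2.5 is accepted even though the window only spans -1 to 1, because the frustum widens with depth. After pre-warping, the test is just six comparisons against constant bounds.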

We will first examine the effects of pre-warping on key points in the view volume. First we need to calculate the v-coordinate v_{2} of P_{2}(u_{2},v_{2},n_{2}), an arbitrary point lying on the line from the eye through P_{1}(0,w_{t},0), where w_{t} represents the top of the window defined on the view plane:

So pre-warping P_{2} gives us a point which lies on the plane v=w_{t}.
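The calculation can be written out as follows, assuming the eye is at (0,0,e_{n}) and parameterising the line from the eye through P_{1} by t. The primed symbol v_{2}' for the pre-warped coordinate is notation introduced here.

```latex
\begin{align*}
\vec{\mathbf{r}}(t) &= (0,\,0,\,e_{n})(1-t) + (0,\,w_{t},\,0)\,t
  = \bigl(0,\; w_{t}t,\; e_{n}(1-t)\bigr) \\[4pt]
n_{2} &= e_{n}(1-t) \quad\Longrightarrow\quad t = 1-\frac{n_{2}}{e_{n}} \\[4pt]
v_{2} &= w_{t}\,t = w_{t}\left(1-\frac{n_{2}}{e_{n}}\right) \\[4pt]
v_{2}' &= \frac{v_{2}}{1-\frac{n_{2}}{e_{n}}} = w_{t}
\end{align*}
```

The result is independent of n_{2}, so every point on the line is mapped to the same height w_{t}.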

Therefore the effect of pre-warping is to transform all points on the plane representing the top of the view volume to points on the plane v=w_{t}. This plane is parallel to the un-plane.

It can be similarly shown that the other three sides of the view volume are transformed to planes parallel to the coordinate planes.

If we take a point on the back plane P_{3}(u_{3},v_{3},B) and apply pre-warping to the n-coordinate:

So we can see that the back plane has been moved to the plane n=\frac{B}{1-\frac{B}{e_{n}}}. This plane is parallel to the original plane.

Similarly the front plane will have been moved to n=\frac{F}{1-\frac{F}{e_{n}}}.

Applying prewarping to the eye gives n'=\frac{e_{n}e_{n}}{(e_{n}-e_{n})}=\infty. This means that the eye has been moved to infinity.

In summary, pre-warping has moved the walls of the frustum shaped view volume to the following planes;
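Collecting the results derived above gives the six planes below. Only w_{t} is named in the text. The symbols w_{l}, w_{r} and w_{b} for the left, right and bottom edges of the window are assumed names.

```latex
\begin{align*}
u &= w_{l}, & u &= w_{r}, \\
v &= w_{b}, & v &= w_{t}, \\
n &= \frac{F}{1-\frac{F}{e_{n}}}, & n &= \frac{B}{1-\frac{B}{e_{n}}}.
\end{align*}
```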

Note that each of these planes is parallel to a coordinate plane.

The final stage of the transformation process is to map the projected points to their final position in the viewport on screen. We will combine this view-volume-to-viewport mapping with the pre-warping matrix. This will allow us to perform all the calculations needed to transform a point in world coordinates to pixel coordinates on screen in one matrix multiplication.

The u and v coordinates will be converted to x and y screen coordinates and to simplify calculation later we will scale the n coordinates (pseudo-depth) to a range between 0 and 1 (scale the front plane to 0 and the back plane to 1).

First we need to translate the view-volume to the origin. This can be done by applying the following translation matrix:

Next the view-volume needs to be scaled to the width and height of the viewport. At this stage we will normalize the pseudo-depth to a range of 0 to 1. To scale the n-coordinate, we need to scale by:

Therefore the scaling matrix required is:

Finally we need to translate the scaled view volume to the position of the viewport:

Combining the above three transformations gives us the Normalization Matrix:
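The three steps (translate, scale, translate) can be sketched per coordinate without writing out the matrices. This assumes the first translation moves the minimum corner of the pre-warped view volume to the origin. The tuple layouts and the name `normalize_to_viewport` are hypothetical.

```python
def normalize_to_viewport(point, window, depth_range, viewport):
    """Map a pre-warped point (u', v', n') to (x, y, depth).

    window      = (w_l, w_r, w_b, w_t)      window on the viewplane
    depth_range = (n_front, n_back)         pre-warped front and back planes
    viewport    = (x_min, x_max, y_min, y_max) on screen
    """
    u, v, n = point
    w_l, w_r, w_b, w_t = window
    n_front, n_back = depth_range
    x_min, x_max, y_min, y_max = viewport

    # 1. Translate the minimum corner of the view volume to the origin.
    u, v, n = u - w_l, v - w_b, n - n_front
    # 2. Scale to the viewport size; pseudo-depth is scaled to 0..1.
    u *= (x_max - x_min) / (w_r - w_l)
    v *= (y_max - y_min) / (w_t - w_b)
    n *= 1.0 / (n_back - n_front)
    # 3. Translate to the position of the viewport.
    return (u + x_min, v + y_min, n)


window = (-1.0, 1.0, -1.0, 1.0)
depth_range = (0.5, 4.5)
viewport = (0.0, 640.0, 0.0, 480.0)
corner = normalize_to_viewport((-1.0, -1.0, 0.5), window, depth_range, viewport)
centre = normalize_to_viewport((0.0, 0.0, 2.5), window, depth_range, viewport)
```

The front-bottom-left corner of the volume maps to the viewport origin with depth 0, and the centre of the volume maps to the centre of the viewport with depth 0.5.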

We can now combine all our transformations into one overall matrix, which will convert a point from world coordinates to Normalized Device Coordinates (NDC) while retaining a representation of the relative depth of the points.

This will give us the view-volume in its final configuration, called the Canonical View Volume.
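The whole chain can be sketched as one function that applies the stages in sequence rather than as a single pre-multiplied matrix. This is an illustration under the same assumptions as the earlier stages (eye on the n-axis at e_n < 0, viewing axes given as unit vectors). The name `world_to_screen` and the argument layout are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def world_to_screen(p, camera, window, planes, viewport):
    """Map world point p to (x, y, depth), with depth 0 at the front plane
    and 1 at the back plane.

    camera   = (vrp, u_axis, v_axis, n_axis, e_n)
    window   = (w_l, w_r, w_b, w_t)
    planes   = (front, back) in viewing coordinates
    viewport = (x_min, x_max, y_min, y_max)
    """
    vrp, u_axis, v_axis, n_axis, e_n = camera
    w_l, w_r, w_b, w_t = window
    front, back = planes
    x_min, x_max, y_min, y_max = viewport

    # 1. World coordinates -> viewing coordinates.
    d = tuple(a - b for a, b in zip(p, vrp))
    u, v, n = dot(d, u_axis), dot(d, v_axis), dot(d, n_axis)

    # 2. Pre-warp (perspective transformation with pseudo-depth).
    #    Points in the plane of the eye (n == e_n) must be clipped beforehand.
    w = 1.0 - n / e_n
    u, v, n = u / w, v / w, n / w

    # 3. Normalise to the viewport, using the pre-warped front and back planes.
    n_front = front / (1.0 - front / e_n)
    n_back = back / (1.0 - back / e_n)
    x = (u - w_l) * (x_max - x_min) / (w_r - w_l) + x_min
    y = (v - w_b) * (y_max - y_min) / (w_t - w_b) + y_min
    depth = (n - n_front) / (n_back - n_front)
    return (x, y, depth)


camera = ((0.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), -5.0)
window = (-1.0, 1.0, -1.0, 1.0)
planes = (1.0, 100.0)
viewport = (0.0, 640.0, 0.0, 480.0)

front_centre = world_to_screen((0.0, 0.0, 1.0), camera, window, planes, viewport)
back_centre = world_to_screen((0.0, 0.0, 100.0), camera, window, planes, viewport)
```

A point at the centre of the front plane lands at the centre of the viewport with depth 0, and the corresponding point on the back plane lands at the same pixel with depth 1.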

© Ken Power 2009